ASP.NET Support Voice Column

Log Parser 2.2 and ASP.NET

To customize this column to your needs, we want to invite you to submit your ideas about topics that interest you and issues that you want to see addressed in future Knowledge Base articles and Support Voice columns. You can submit your ideas and feedback using the Ask For It form. There's also a link to the form at the bottom of this column.

Welcome to the ASP.NET Support Voice Column. My name is Rahul Soni, and I have been with Microsoft for about two years now. In this month's column, I will discuss some of the facts about Log Parser 2.2. Before we go into specific scenarios, let me take a moment to describe the Log Parser tool.

About

Log Parser is a very powerful, versatile tool that provides universal query access to text-based data, such as log files, XML files, and CSV files, as well as key data sources on the Microsoft Windows operating system, such as the event log, the registry, the file system, and the Active Directory directory service. Please

click here to download the tool. In this version, you can parse the following files very easily from the Log Parser tool:

IISW3C, NCSA, IIS, IISODBC, BIN, IISMSID, HTTPERR, URLSCAN, CSV, TSV, W3C, XML, EVT, ETW, NETMON, REG, ADS, TEXTLINE, TEXTWORD, FS and COM.I agree that the command-line interface is not very intuitive, and you will find it out as soon as you install and run the tool. But what the tool lacks in intuitive feeling is being patched up by the documentation provided for the tool. I'll give a small piece of advice here: Don't let the command-line interface put you off so soon. This tool is a must in a geek's toolkit and helps you a lot, especially if you want to troubleshoot from a performance perspective.

I am not going to discuss "how to" use the Log Parser tool because the documentation is quite good and the installation folder also contains a "Samples\Queries" folder that contains quite a few samples.

So, after I am done with my bit of promotion of the Log Parser tool, let me get back to the scenarios I was talking about.

Scenario 1: Parsing large text files for a specific text

A small background of the problem

Your customer experiences an "Access Denied" issue when they did something. You recommend that the customer use another brilliant tool that is named

Filemon and reproduce the issue. If it is an issue with resource ACLs, the Filemon tool will be able to catch the error. Now, you ask the customer to send you the saved Filemon log file. Here comes the unfortunate part. You get the file (say, Filemon.log) but find that the size is huge (Filemon does log a lot of data!). Notepad will appear to hang and will be painfully slow to find the "Access Denied" lines in the log file. Microsoft Office Excel will refuse to open the file completely. Now what?

Answer: Open the Log Parser command window, and use the following command:

LOGPARSER "Select Text from C:\Filemon.log where Text like '%Access Denied%'" -i:TEXTLINE -q:OffWhat we are telling the Log Parser tool is to parse through each line (Text) from the given file (C:\Filemon.log) where the line contains 'Access Denied'. The -i:TEXTLINE command-line switch specifies the input format, and the -q:Off command-line switch tells it to be verbose (-q[:ON|OFF]:quiet mode;). If you turn the -q command-line switch on, the statistics shown and the field name (Text) in the output below will be absent.

Sample output

Text7447 1:49:24 PM explorer.exe:1200 DIRECTORY C:\ Access Denied StatisticsElements processed: 640444

Elements output: 1

Execution time: 12.75 seconds

How to avoid pressing ENTER multiple times if the number of records returned by your query is larger than 10?

Answer: Use the -rtp:-1 parameter in your queries!

This will be a necessary parameter in case you want to redirect the output into a file. Also, when you write to STDOUT, output records are displayed in batches made up of a number of rows equal to the value specified for this parameter. Once a batch of rows has been displayed, it will prompt the user to press a key to display the next batch of rows. Specifying "-1" for this parameter disables batching altogether!

Using query files

Another way to achieve the same results in a cleaner way is to create a query file. This way, you can easily tweak your query file and run it from the Log Parser tool's command line. Apart from that, you can easily create a GUI according to your taste. The GUI loads the saved SQL query and runs the query by using the Log Parser tool.

If you want to achieve the same effect (as in Scenario 1) from SQL queries, you can provide the following command:

LOGPARSER -i:TEXTLINE file:C:\LPQ\SearchAnyTextfile.sql -q:offC:\LPQ\SearchAnyTextFile.sql contains the following information:

Note Create a folder LPQ in your C:\ folder to use the samples shown in this column.

Select Text as LineFromFile

FROM C:\Filemon.log

WHERE Text

LIKE '%Access Denied%'

If you notice, the query looks much cleaner now and makes more sense. This way, you can create more complex and larger queries as well, and everything will fit on your command line because you are using the .SQL file instead of the whole query. It is not possible to fit more than 260 characters on the command line anyways!

Keeping the benefits of using query files, I will use this method in the following scenarios. I have all my queries saved in C:\LPQ with a .sql extension (you can use your own).

Scenario 2: Finding the 10 largest files from a specific folder, including its subfolders

A small background of the problem

You have a folder, and there are quite a few subfolders and files in it. You want to find out the top 10 largest files in that folder, including its subfolders.

I know, for a specific folder, you can simply change the view (on the View menu, click Details) in Windows Explorer and sort the view by size. But the problem here is that you need to account for the subfolder's contents as well.

Answer: Open the Log Parser tool command window, and use the following command:

LOGPARSER -i:FS file:C:\LPQ\Top10Files.sql -q:off -Recurse:-1Top10Files.sql contains the following:

SELECT TOP 10

Path,

Name,

Size,

Attributes

FROM 'C:\Program Files\Microsoft Office\*.*'

ORDER BY Size DESC

Here -i:FS signifies that we are querying the file system. You can view the complete list of FS input format fields in the documentation and frame your query accordingly.

-Recurse:-1 implies that we want to include all the subfolders. If you don't want all the subfolders or want to limit recursion, use 0, 1, 2, etc. The number implies the depth the parser will go into. 0 means no recursion, 2 means that the parser recur until depth 2, etc.

Sample output

Path Name Size Attributes

------------------------------------------------------------ ------------ -------- ----------

C:\Program Files\Microsoft Office\Office10\WINWORD.EXE WINWORD.EXE 10738448 -A--R----

C:\Program Files\Microsoft Office\Office10\EXCEL.EXE EXCEL.EXE 9358096 -A--R----

C:\Program Files\Microsoft Office\Office10\OUTLLIB.DLL OUTLLIB.DLL 6326984 -A-------

C:\Program Files\Microsoft Office\Office10\POWERPNT.EXE POWERPNT.EXE 6093584 -A--R----

C:\Program Files\Microsoft Office\Office10\MSOWC.DLL MSOWC.DLL 3041880 -A-------

C:\Program Files\Microsoft Office\Office10\CLIPPIT.ACS CLIPPIT.ACS 2904417 -A-------

C:\Program Files\Microsoft Office\Office10\GRAPH.EXE GRAPH.EXE 2144824 -A-------

C:\Program Files\Microsoft Office\Office10\1033\OUTLLIBR.DLL OUTLLIBR.DLL 1977032 -A-------

C:\Program Files\Microsoft Office\Office10\1033\XLMAIN10.CHM XLMAIN10.CHM 1646072 -A-------

C:\Program Files\Microsoft Office\Office10\MSOWCW.DLL MSOWCW.DLL 1200177 -A-------

StatisticsElements processed: 1000

Elements output: 10

Execution time: 0.42 seconds

Scenario 3: Finding the 20 slowest pages in your Web site

A small background of the problem

You are using Microsoft Internet Information Services (IIS) 6, have a few ASP.NET Web sites, and are not really happy with the performance. You want to tweak the server and know about the top 20 pages that are taking the highest time to serve from the Web server. It is not as if you determine the highest time-taking pages, fix it, and your issues are resolved. Unfortunately, there are no silver bullets to resolve performance issues. At least there should be a way to start. Correct?

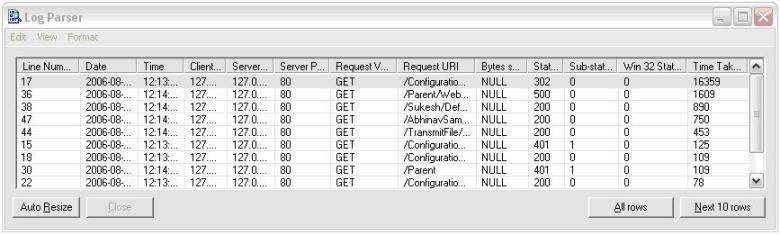

Answer: Open the Log Parser command window, and use the following command:

LOGPARSER -i:IISW3C file:C:\LPQ\Slowest20FilesInIIS.sql -o:DataGrid -q:offSlowest20FilesInIIS.sql contains the following example code.

Select Top 20

LogRow as [Line Number],

date as [Date],

time as [Time],

c-ip as [Client-IP],

s-ip as [Server IP],

s-port as [Server Port],

cs-method as [Request Verb],

cs-uri-stem as [Request URI],

sc-bytes as [Bytes sent],

sc-status as [Status],

sc-substatus as [Sub-status],

sc-win32-status as [Win 32 Status],

time-taken as [Time Taken]

From

C:\ex060813.log

Order by time-taken desc

Here, -i:IISW3C signifies that we are querying the IIS W3C logs. You can view the complete list of IISW3C input format fields in the documentation and frame your query accordingly.

-o:DataGrid implies that the output should be shown in a data grid as follows:

Note To use this query, you should be using IISW3C Logging and must have enabled Advanced Logging properties. (Open your Web site properties, click the Web Site tab, click to select Enable Logging, and then set the Active log format as W3C Extended Log File Format. Click Properties, click the

Advanced tab, and then click to select all the options.)

Scenario 4: Finding the 20 most commonly used .aspx pages in your Web site

A small background of the problem

You are using IIS 6, have a few ASP.NET Web sites, and want to know the most commonly used .aspx files so that if you tune the performance of those pages, eventually the whole Web site/server benefits. It is always better to spend time tweaking the most-used pages rather than the pages that are used sparingly. (Although there could be exceptions to this rule. Say the sparingly used page is a really bad page that causes high CPU utilization for that matter!) Now, if you find that there a certain page takes a long time to be served (Scenario 3) and the page is one of the most commonly used pages, you should always confirm that the page behaves well under stress.

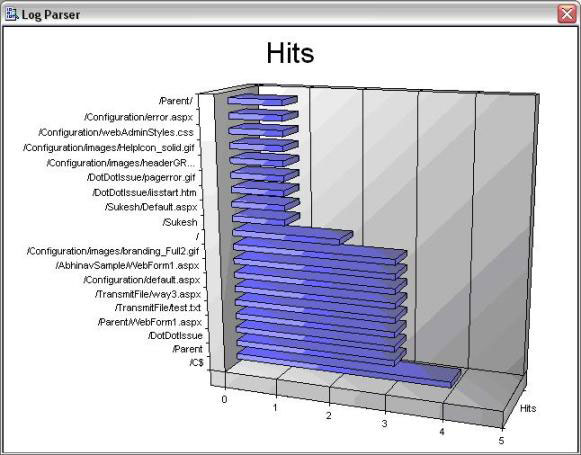

Answer: Open the Log Parser tool command window, and use the following command:

LOGPARSER -i:IISW3C file:C:\LPQ\Popular20FilesInIIS.sql -chartType:Bar3D -groupSize:640x480 -view:onPopular20FilesInIIS.sql contains the following example code.

Select Top 20

cs-uri-stem as [Request URI],

COUNT(*) AS Hits

INTO MyChart.gif

FROM C:\ex060813.log

Group by cs-uri-stem ORDER BY Hits DESC

In this case, -chartType:Bar3D tells the Log Parser tool what kind of chart to prepare. The -groupSize parameter specifies the width and height of the target image, in pixels. The set of available chart types depends on the version of the Microsoft Office Web Components installed on the local computer.

Here is how the output looks:

As you have already seen, there could be multiple ways in which the Log Parser tool could prove really helpful in analyzing the data from various logs. Only creativity is the limit here! There are almost unlimited ways in which the data could reflect a much better picture to you, and it could be something you can act upon. What we have touched is just the tip of the iceberg! You can also use high-level languages such as Microsoft Visual C#, Microsoft Visual Basic .NET, etc. to create useful programs using the power of the Log Parser tool. Check out the "Resources" section below.

Resources

-

What's new in Log Parser 2.2

http://www.microsoft.com/technet/scriptcenter/tools/logparser/lpfeatures.mspx

-

How Log Parser 2.2 works

-

Microsoft Log Parser Toolkit – a book on Log Parser!

http://www.microsoft.com/technet/scriptcenter/tools/logparser/default.mspx

I would like to discuss more scenarios for the Log Parser tool, and I have created a new section in my blog just for the Log Parser tool. If you have any suggestions for scenarios or Log Parser tool queries that you want to share, please mail me at

rahulso@microsoft.com, and I would post it at my earliest convenience at the following Web site:

https://learn.microsoft.com/archive/blogs/rahulso/

As always, feel free to submit ideas on topics you want addressed in future columns or in the Knowledge Base using the Ask For It form.

The third-party products that this article discusses are manufactured by companies that are independent of Microsoft. Microsoft makes no warranty, implied or otherwise, regarding the performance or reliability of these products.