What can Copilot do?

Microsoft 365 Copilot is your AI assistant for work. It can help you with tasks like brainstorming, writing, coding, and searching, providing intelligent suggestions and automating repetitive tasks to make your work easier. You can find Copilot Chat in the apps you use every day, like Microsoft 365, Teams, Outlook, and Edge. Copilot helps you stay connected, be creative, and be more productive.


Copilot uses a large language model that mimics natural language based on patterns learned from large amounts of training data. The model is optimized for conversation by using Reinforcement Learning from Human Feedback (RLHF), a method that uses human feedback, such as demonstrations and ratings of model responses, to guide the model toward a desired behavior.

When you submit a text prompt to Copilot, the model generates a response by making suggestions about what text should come next in a string of words. The model is based on a domain-specific language (DSL) that allows you to specify what kind of information you want to search and synthesize from either your Microsoft 365 data or from the web. It's important to keep in mind that the system was designed to mimic natural human communication, but the output may be inaccurate or out of date.
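To make the idea of predicting "what text should come next" concrete, here is a deliberately tiny sketch. It is a bigram model that counts which word follows which in a few sample sentences, then repeatedly appends the most frequent next word. It is an illustration only and assumes nothing about how Copilot's large language model is actually built; real models learn far richer patterns over subword tokens. The function names `train_bigrams` and `generate` and the sample corpus are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word is followed by each other word in the corpus."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedily append the most frequent next word until no continuation is known."""
    words = [start]
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the last word was never followed by anything in the corpus
        next_word, _count = candidates.most_common(1)[0]
        words.append(next_word)
    return " ".join(words)


# Hypothetical training sentences for the toy model.
corpus = [
    "please summarize the meeting notes",
    "please summarize the report",
    "summarize the meeting notes today",
]
model = train_bigrams(corpus)
print(generate(model, "please"))  # please summarize the meeting notes today
```

Greedy selection always takes the single most likely continuation. Production language models typically sample from a probability distribution instead, which is one reason their wording can vary between attempts.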

With a Microsoft 365 Copilot license, the toggle at the top of the Copilot user interface allows you to switch between grounding your prompts in work and web data. Both modes provide generative AI services, but they serve different purposes to help you be more productive.

- In work mode, Copilot Chat responses are grounded in work data available to you. Using the Microsoft Graph, Copilot can help you access organizational resources or content, such as documents in OneDrive, emails, or other data. This is where you can manage and complete tasks related to your work. You can draft emails, create documents, manage your calendar, and collaborate with colleagues directly from this mode.
- In web mode, Copilot Chat is grounded in data from the public web in the Bing search index. This mode combines the power of generative AI with up-to-date information from the web. It’s useful for conducting research, finding resources, and staying updated with the latest news and trends.

The work and web modes are designed to streamline your workflow and make it easier to switch between work-based tasks and web-based information sources. Both work and web modes offer enterprise-grade security, privacy, and compliance.
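The main difference between the two modes is where Copilot looks for grounding data before it answers. The sketch below models that idea as a toy retrieval step: the mode toggle selects a source, matching snippets are retrieved, and they are attached to the prompt along with where they came from. Everything here is an assumption for illustration. The in-memory lists stand in for Microsoft Graph (work mode) and the Bing search index (web mode), and `Mode`, `Snippet`, `retrieve`, and `build_grounded_prompt` are hypothetical names, not a Copilot API.

```python
import re
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    WORK = "work"  # ground prompts in organizational data
    WEB = "web"    # ground prompts in public web results


@dataclass(frozen=True)
class Snippet:
    source: str
    text: str


# Hypothetical in-memory stand-ins for the real work and web data sources.
WORK_INDEX = [
    Snippet("OneDrive/HR/Vacation policy.docx",
            "The vacation policy describes how to request paid time off."),
]
WEB_INDEX = [
    Snippet("https://example.com/news", "Recent news about the product launch."),
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words, dropping punctuation."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def retrieve(query: str, mode: Mode) -> list[Snippet]:
    """Search only the source chosen by the mode toggle for snippets sharing a word with the query."""
    index = WORK_INDEX if mode is Mode.WORK else WEB_INDEX
    terms = tokenize(query)
    return [s for s in index if terms & tokenize(s.text)]


def build_grounded_prompt(query: str, mode: Mode) -> str:
    """Prepend the retrieved snippets, each labeled with its source, to the user's question."""
    snippets = retrieve(query, mode)
    context = "\n".join(
        f"[{i}] {s.text} (source: {s.source})" for i, s in enumerate(snippets, start=1)
    )
    return f"Answer using only these sources:\n{context}\n\nQuestion: {query}"


print(build_grounded_prompt("What's our vacation policy?", Mode.WORK))
```

Keeping the source next to each snippet is what allows a grounded answer to cite where its information came from.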

Use natural language to ask Copilot questions or give instructions.

In work mode, try asking questions about your organization, requesting help generating content, or asking for a summary of a topic, document, or chat conversation. The more specific your prompt, the better the results.

Here are some work-based example prompts to help you get started:

- Summarize unread emails from John.
- Draft a message to my team with action items from my last meeting.
- What’s our vacation policy?
- Who am I meeting with tomorrow?

In web mode, get quick summaries about specific topics, analyze or compare products to make informed business decisions, create images, draft content, and learn new skills.

Here are some web-based example prompts you might want to try:

- Give me a summary of recent news about [product].
- Help me learn about [topic].
- How do I set achievable goals at work?
- How does our internal forecast compare with EPS growth in the top US public CPG companies?

Copilot Pages is available in both work mode and web mode. With a Microsoft 365 Copilot or Microsoft 365 license, you can access Copilot Pages, a dynamic, persistent canvas designed for multiplayer AI collaboration directly in Copilot Chat. Here’s how:

- Select Edit in page at the bottom of a work mode response.
- The response will open in a new page beside the chat thread.
- You can then edit, add information, and share it.

Tip: Access previous pages from your recent chats, or by selecting Pages from the navigation menu of the Microsoft 365 Copilot app.

No, you no longer need to link your personal account to your work account to use Copilot. Sign in with your work or school account in Microsoft Edge to access Copilot Chat via the Edge sidebar.

No, you can't use Copilot with your personal and work accounts at the same time. To sign in with either your personal or your work account, go to m365copilot.com.

Tip: Microsoft Edge supports creating both work and personal profiles and makes it easy to have both open at the same time. See Sign in and create multiple profiles in Microsoft Edge.

To ensure quality, Copilot is given test questions, and its responses are evaluated based on criteria such as accuracy, relevance, tone, and intelligence. Those evaluation scores are then used to improve the model. It's important to keep in mind that the system was designed to mimic natural human communication, but the output may be inaccurate, incorrect, or out of date.
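Microsoft doesn't describe its exact scoring method, so the following is only a hypothetical illustration of how per-criterion scores from test questions could be aggregated to reveal where a model is weakest. The 1 to 5 scale, the scores, and the function name `criterion_averages` are all assumptions.

```python
from statistics import mean

# The evaluation criteria named above.
CRITERIA = ("accuracy", "relevance", "tone", "intelligence")


def criterion_averages(evaluations: list[dict[str, float]]) -> dict[str, float]:
    """Average each criterion's score across all evaluated test questions."""
    return {c: mean(e[c] for e in evaluations) for c in CRITERIA}


# Hypothetical rater scores (1 to 5 scale) for two test questions.
evaluations = [
    {"accuracy": 4, "relevance": 5, "tone": 4, "intelligence": 3},
    {"accuracy": 2, "relevance": 4, "tone": 5, "intelligence": 4},
]
averages = criterion_averages(evaluations)
weakest = min(averages, key=averages.get)  # criterion with the lowest average
print(weakest)  # accuracy
```

Averaging per criterion, rather than collapsing everything into one number, keeps a strong tone score from hiding a weak accuracy score.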

Providing feedback with the Thumbs Up and Thumbs Down icons helps teach Copilot which responses are and aren't helpful to you. We use this feedback to improve Copilot, just as we use customer feedback to improve other Microsoft 365 services and Microsoft 365 apps. We don't use this feedback to train the foundation models used by Copilot.

Customers can manage feedback through admin controls. For more information, see Manage Microsoft feedback for your organization.

Microsoft 365 Copilot presents only the data that each individual has permission to access, using the same underlying data-access controls as other Microsoft 365 services. The permissions model within your Microsoft 365 tenant helps ensure that data doesn't leak between users and groups. For more information on how Microsoft protects your data, see the Microsoft Privacy Statement.
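The principle that Copilot shows only what a user can already access is sometimes called security trimming: results are filtered against access-control lists before anything reaches the model. The sketch below is a simplified, hypothetical model of that check. `Document`, `can_access`, and `security_trim` are illustrative names, and real Microsoft 365 permissions (sharing links, inherited permissions, and so on) are considerably more involved.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    allowed_users: frozenset[str]                 # users granted access directly
    allowed_groups: frozenset[str] = frozenset()  # groups whose members have access


def can_access(user: str, user_groups: set[str], doc: Document) -> bool:
    """A user can see a document if listed directly or a member of an allowed group."""
    return user in doc.allowed_users or bool(user_groups & doc.allowed_groups)


def security_trim(user: str, user_groups: set[str], results: list[Document]) -> list[Document]:
    """Drop every search result the user may not open before it reaches the model."""
    return [doc for doc in results if can_access(user, user_groups, doc)]


# Hypothetical search results with their access-control lists.
search_results = [
    Document("Team roadmap", frozenset({"alex"}), frozenset({"engineering"})),
    Document("Salary review", frozenset({"hr-lead"})),
    Document("Company handbook", frozenset(), frozenset({"all-staff"})),
]
visible = security_trim("sam", {"all-staff"}, search_results)
print([doc.title for doc in visible])  # ['Company handbook']
```

Because the filter runs before generation, the model never sees content the user couldn't open directly, so it can't summarize or quote it.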

Prompts and responses aren't used to train foundation models.

Microsoft 365 Copilot is built on Microsoft's comprehensive approach to security, compliance, and privacy.

For more information, refer to the following:

- If you’re using Microsoft 365 Copilot in your organization (with your work or school account), see Data, Privacy, and Security for Microsoft 365 Copilot.
- If you're using Microsoft 365 apps at home (with your personal Microsoft account), see Copilot in Microsoft 365 apps for home: your data and privacy.

Microsoft 365 Copilot uses the latest information from the web and the work data available to you to generate responses, depending on which mode you use. Although these AI-generated responses strive for accuracy, they can occasionally contain mistakes. To help you verify the information, Copilot provides references showing where the information came from.

If you find an answer is incorrect or if you encounter harmful or inappropriate content, please provide feedback by selecting the Thumbs Down icon and describing the issue in detail.

As AI is poised to transform our lives, we must collectively define new rules, norms, and practices for the use and impact of this technology. Microsoft has been on a Responsible AI journey since 2017, when we defined our principles and approach to ensuring this technology is used in a way that is driven by ethical principles that put people first. Read more about Microsoft's framework for building AI systems responsibly, the ethical principles that guide us, and the tooling and capabilities we've created to help customers use AI responsibly.

Microsoft 365 Copilot in Word and other apps can help you carry out specific goals and tasks. For example, Copilot in Word can help you create drafts, summarize a document, and more.

Copilot Chat within Microsoft 365 Copilot helps you ground your prompts in work and web data.

You can write your own prompts to get data summaries, brainstorm new content, and get quick answers (for example, about the location of files). If you want more or less than what Copilot provides, add information or detail to your prompt and try again.

To learn more about prompts, see the prompting toolkit.

When you enter prompts using Copilot, the information contained in the prompt, the data retrieved, and the generated response remain within your Microsoft 365 tenant, in keeping with our current privacy, security, and compliance commitments.

Actions that you perform on a prompt after sending it to Copilot, such as saving it, store a new version of that prompt for your individual use.

You can access your saved prompts in Copilot by selecting the Prompt Gallery. To learn how to save, delete, or manage your saved prompts, see How to save prompts.

All this data is stored compliantly and adheres to Microsoft 365’s general privacy, security, and compliance commitments. For more information, see Data, Privacy, and Security for Microsoft 365 Copilot.

When you start a new Copilot chat, Copilot will automatically generate a chat title to display in your chat history list. This is to make it easier to find chats you've had with Copilot in the past.

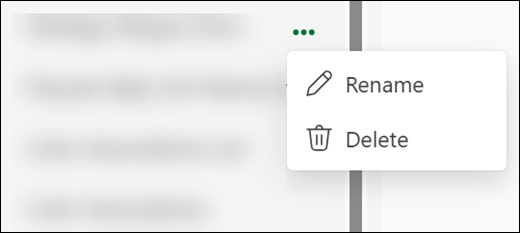

You can change the generated title by selecting the More options button.

Copilot supports a wide range of languages. For the latest information on languages that have been tested and validated for all Copilot apps, see Supported languages for Microsoft 365 Copilot.

You can also try other languages with Copilot Chat, though results may vary. If you try another language, please use the Thumbs Up or Thumbs Down feedback controls to tell us about your experience.

When you upload an image for use in image generation, Copilot processes it using an AI model to help fulfill your request. This includes processing of the full image, including visual elements such as objects, background, and style contained therein. If the image includes people, this analysis may include their physical and facial characteristics. For example, depending on your request, the model may assess how many individuals are in the image, group individuals, alter the background, or add new elements. While the model may, in certain circumstances, spot well-known public figures, the AI model cannot otherwise identify unique individuals.

Enterprise customers are responsible for ensuring they have all appropriate rights and necessary permissions to use any uploaded images, including the permission of all individuals depicted in them.

For AI-generated images created within Microsoft 365 Copilot, we have implemented Content Credentials, which provide provenance metadata based on the C2PA standard, to help people identify whether images were edited or generated with AI. You can view this provenance metadata on the Content Credentials site.