Student support spotlight cards in Education Insights

Applies To

Microsoft Teams, Microsoft Teams for Education

AI-based Student support spotlight cards are designed to help educators differentiate their attention and support students before they fall behind. The card uses machine learning to monitor the digital engagement patterns of the class as a whole and of each individual student, and notifies educators when students show early signs of disengagement. The spotlight card provides a list of students who may need educator support in the following week, along with specific talking points based on each student's change in activity. Predictions are purely formative and rely only on digital engagement signals available in Education Insights; no additional data is collected.

How should educators use the spotlight card?

As the educator, you know and understand your students best. This spotlight is designed to shine a light on student learning and engagement to aid educators in differentiating support to empower their students equitably.

This tool is meant to be used in combination with personal relationships and an understanding of each student’s abilities and circumstances. Spotlights do not evaluate students, but rather provide opportunities for educators to build upon their existing relationships and differentiate support.

Important: Some students who need support may be demonstrating their need with consistent inactivity. Students who are consistently inactive will not be highlighted in the student support card, as they have not provided activity data to interpret. Please pay close attention to the Activity spotlight card to identify students who are inactive, as that is another indicator that students need support.

Research for the student support card

The consensus in the pedagogical research community is that a decline in engagement is an indicator that students are experiencing challenges and face an increased risk of falling behind (Christenson, Reschly, and Wylie, 2012), and that students’ digital engagement data can be used to assess their level of engagement and to predict future behaviors and achievements with high accuracy. Additionally, this data can be used to identify “at-risk” students, as it is highly correlated with academic achievement (Asarta and Schmidt, 2013; Baradwaj and Pal, 2012; Beck, 2004; Campbell et al., 2006; Goldstein and Katz, 2005; Johnson, 2005; Michinov et al., 2011; Morris et al., 2005; Qu and Johnson, 2005; Rafaeli and Ravid, 1997; Wang and Newlin, 2002; You, 2016).

Research also shows that early intervention helps mitigate that risk. There is evidence that a high percentage of at-risk students send distress signals long before they actually drop out of school (Neild, Balfanz, and Herzog, 2007). For this reason, early-warning systems are helping educators prevent students from falling off the track to graduation and target interventions and support to the students who need them most (Pinkus, 2008).

Asarta, C. J., & Schmidt, J. R. (2013). Access patterns of online materials in a blended course. Decision Sciences Journal of Innovative Education, 11(1), 107-123.

Baradwaj, B. K., & Pal, S. (2012). Mining educational data to analyze students' performance. arXiv preprint arXiv:1201.3417.

Beck, J. E. (2004, August). Using response times to model student disengagement. In Proceedings of the ITS2004 Workshop on Social and Emotional Intelligence in Learning Environments (Vol. 20, No. 2004, pp. 88-95).

Campbell, J. P., Finnegan, C., & Collins, B. (2006, July). Academic analytics: Using the CMS as an early warning system. In WebCT impact conference.

Christenson, S. L., Reschly, A. L., & Wylie, C. (Eds.). (2012). Handbook of research on student engagement. Springer Science & Business Media.

Goldstein, P. J., & Katz, R. N. (2005). Academic analytics: The uses of management information and technology in higher education (Vol. 8, No. 1, pp. 1-12). Educause.

Johnson, G. M. (2005). Student alienation, academic achievement, and WebCT use. Journal of Educational Technology & Society, 8(2), 179-189.

Michinov, N., Brunot, S., Le Bohec, O., Juhel, J., & Delaval, M. (2011). Procrastination, participation, and performance in online learning environments. Computers & Education, 56(1), 243-252.

Morris, L. V., Finnegan, C., & Wu, S. S. (2005). Tracking student behavior, persistence, and achievement in online courses. The Internet and Higher Education, 8(3), 221-231.

Neild, R. C., Balfanz, R., & Herzog, L. (2007). An early warning system. Educational Leadership, 65(2), 28-33.

Pinkus, L. (2008). Using early-warning data to improve graduation rates: Closing cracks in the education system. Washington, DC: Alliance for Excellent Education.

Qu, L., & Johnson, W. L. (2005, May). Detecting the learner's motivational states in an interactive learning environment. In Proceedings of the 2005 conference on artificial intelligence in education: Supporting learning through intelligent and socially informed technology (pp. 547-554).

Rafaeli, S., & Ravid, G. (1997). Online, web-based learning environment for an information systems course: Access logs, linearity and performance. In Proc. Inf. Syst. Educ. Conf (Vol. 97, pp. 92-99).

Wang, A. Y., & Newlin, M. H. (2002). Predictors of web-student performance: The role of self-efficacy and reasons for taking an on-line class. Computers in Human Behavior, 18(2), 151-163.

You, J. W. (2016). Identifying significant indicators using LMS data to predict course achievement in online learning. The Internet and Higher Education, 29, 23-30.

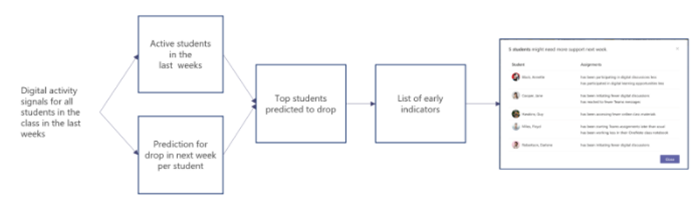

Prediction of student decline in activity

The machine learning model takes the digital activity patterns of each individual student over the past three weeks and uses that data to identify students who are active today but have shown early indicators that their engagement level may drop in the coming week. The model takes into consideration the specific learning patterns of the classroom ecosystem and accounts for gaps in class activity resulting from vacations and holidays. The prediction of student engagement is individual, with the understanding that different students may exhibit different activity patterns and there is no such thing as “normal” behavior. These student support spotlights do not evaluate students, but rather identify activity patterns that are significant enough to indicate a potential need for support, empowering educators to intervene early.

The activity signals used as input for the model include:

- SharePoint file access patterns: Open, Modify, Download, Upload
- Assignments and Submission access patterns: assign, open, turn-in
- Class chat participation: visit, post, reply, expand, react
- Class meetings participation
- OneNote class notebook pages access: edit, Reflect usage, post

Important: The model uses activity signals, not the content itself. For example, it does NOT use content from chat messages, content of documents, Reflect emotions, or anything that could be used to identify that student.

Talking points

The model identifies up to 15% of students in the class who have demonstrated concerning activity signals, then highlights the indicators that each student displayed in Talking points. When you select the student support spotlight card, the students who have shown early indicators of disengagement will be listed alongside Talking points designed to help you initiate a conversation about that student’s support needs.

Talking points you may see on Student support spotlight cards include:

- has been participating in digital discussions less
- has been initiating fewer digital discussions
- has reacted to fewer Teams messages
- has participated in digital learning opportunities less
- has been accessing fewer online class materials
- has been starting Teams assignments later than usual
- has been working less in their OneNote class notebook
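
The 15% cap on highlighted students can be illustrated with a small sketch. It assumes that "up to 15%" rounds down to a whole number of students and that each student has some numeric concern score compared against a cutoff. Neither detail is stated in this article, and the names `max_highlighted`, `select_for_support`, `concern_scores`, and `threshold` are hypothetical rather than part of Education Insights.

```python
def max_highlighted(class_size: int, cap_percent: int = 15) -> int:
    """Largest number of students the card could list for a class.

    Assumption: the 15% cap rounds down; the actual rounding rule
    is not documented in this article.
    """
    if class_size < 0:
        raise ValueError("class_size must be non-negative")
    # Integer arithmetic avoids floating-point rounding surprises.
    return class_size * cap_percent // 100


def select_for_support(concern_scores: dict[str, float],
                       threshold: float = 0.5) -> list[str]:
    """Return the highest-scoring students without exceeding the cap.

    `concern_scores` and `threshold` are hypothetical stand-ins for
    whatever the real model produces.
    """
    limit = max_highlighted(len(concern_scores))
    # Keep only students whose score reaches the (assumed) cutoff.
    flagged = [s for s, score in concern_scores.items() if score >= threshold]
    # Most concerning first, then trim to the cap.
    flagged.sort(key=lambda s: concern_scores[s], reverse=True)
    return flagged[:limit]
```

Under these assumptions, a class of 20 students would show at most 3 names, and fewer when fewer students reach the cutoff.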

Privacy and responsible AI

At Microsoft we care deeply about privacy and ethical use of AI. Therefore, the following privacy principles are embedded in the model:

- The model is trained in an eyes-off manner, meaning our data scientists do not have access to view the class data.
- We only share Insights about students with individuals who already have access to the underlying data and have personal familiarity with the student, i.e., the class educator.
- The model will never profile a student as ‘good’ or ‘bad’. We aim to support the educator in making informed decisions about their students by sharing objective observations of data in a non-judgmental manner.
- The model is intentional about avoiding bias and does not use any identifying information (such as name, gender, or race). The model uses only behavioral information from students' interactions in Teams.
- The prediction is purely formative, meaning it is designed to alert educators and support them in modifying their practice to benefit their students, but is not saved within the Insights database for future review. It is a reflection of behavior at a specific point in time and should not be used for official assessment of any student.

Model limitations

- The model examines one class at a time. If a student’s activity has declined in one class and increased in another, educators may be notified of the need for support only in the class with declined activity.
- The model only uses digital engagement through Teams as a measure. Direct communication from student to educator, between students, or outside of Teams is not considered. Digital activity outside of Teams will not be represented in the model.
- To allow for nuanced calculation of learning opportunities, the prediction will only be performed for classes with more than 5 students, at least 4 weeks of digital activity, and at least 30% student participation in one or more of the digital activities used by the model.
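
The three eligibility conditions in the last limitation can be read as a simple check. The sketch below is only an illustration: the `ClassActivity` type, its field names, and `is_eligible_for_prediction` are hypothetical, and it interprets "30% student participation" as the share of enrolled students who took part in at least one of the model's digital activities, which is an assumption.

```python
from dataclasses import dataclass


@dataclass
class ClassActivity:
    """Hypothetical summary of a class; not an Education Insights type."""
    student_count: int           # students enrolled in the class
    weeks_of_activity: int       # weeks with recorded digital activity
    participating_students: int  # students active in >= 1 tracked activity


def is_eligible_for_prediction(c: ClassActivity) -> bool:
    """Apply the three thresholds stated in the limitation above."""
    if c.student_count <= 5:       # needs more than 5 students
        return False
    if c.weeks_of_activity < 4:    # needs at least 4 weeks of activity
        return False
    # At least 30% participation, compared with integers to avoid
    # floating-point rounding at the boundary.
    return c.participating_students * 100 >= 30 * c.student_count
```

For example, a class of 10 students with 4 weeks of activity would qualify once 3 students have participated, while a class of 5 would not qualify regardless of activity.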